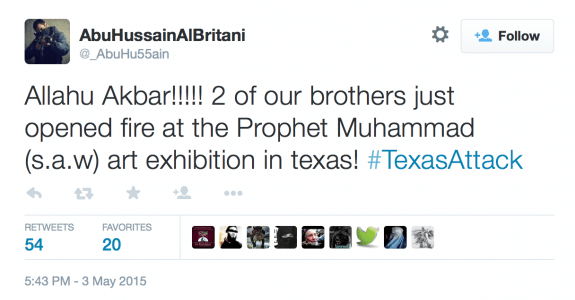

Social media has become a breeding ground for extremist rhetoric, which has led to lawsuits and public pressure on the tech giants to stop the spread of hateful agendas of fear and violence.

Counter Extremism Project

jta.org

While the companies are staying silent on rumors that they use technology to automatically delete extremist content from their sites, it has been confirmed that they have met with the Counter Extremism Project (CEP) about adopting measures similar to those that Google, Microsoft, and internet service providers use to block images of child abuse.

The software was developed by Dartmouth College computer scientist Hany Farid, who also worked on the PhotoDNA system (the program used to detect child abuse images). Facebook Inc, Twitter Inc, and YouTube Inc are all reported to have spoken with CEP representatives; however, comments released from the proceedings match Twitter’s concerns over censorship.

Speaking to the Huffington Post, Farid had the following to say about his program:

“We don’t need to develop software that determines whether a video is jihadist, most of the ISIS videos in circulation are reposts of content someone has already flagged as problematic. We can easily remove that redistributed content, which makes a huge dent in their propaganda’s influence.”

thegatewaypundit.com

The process used to find and delete the posts in question is called “hashing,” the same technique used to fight the online distribution of child abuse images. More about this system momentarily; however, the system has been successful, and recent changes on Facebook suggest the company has already integrated it. While not confirmed, the recent change in Facebook’s algorithm could have been used to fully integrate Farid’s system, as certain hashtags can no longer be used on the platform.

Future of CEP

Along with Facebook, Google and YouTube are also believed to have begun integrating the system, according to a report from Reuters. Neither company has commented on the topic. Twitter, meanwhile, is believed not to have even begun introducing the software, despite being a known platform for extremist propaganda and images of child abuse.

time.com

The program is said to look for hashtags (or hashes) known to be connected to extremists and to delete the content automatically, without a manual check by an employee of the company using the technology. Companies may also be hesitant to announce their use of the program because of political concerns.
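To make the matching idea concrete, here is a minimal sketch of how a platform might recognize a re-upload of content that has already been flagged. It is not Farid’s software or PhotoDNA: those rely on robust fingerprints designed to survive resizing and re-encoding, while this sketch swaps in an ordinary SHA-256 digest, which only catches byte-for-byte identical copies. The names `fingerprint` and `FlaggedContentFilter` are hypothetical and chosen purely for illustration.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return a hex digest that identifies this exact sequence of bytes."""
    return hashlib.sha256(data).hexdigest()


class FlaggedContentFilter:
    """Hypothetical store of fingerprints for content already flagged by a reviewer."""

    def __init__(self) -> None:
        self._known_hashes: set[str] = set()

    def flag(self, data: bytes) -> None:
        # Record the fingerprint only; the flagged file itself need not be kept.
        self._known_hashes.add(fingerprint(data))

    def is_known(self, data: bytes) -> bool:
        # A new upload matches if its fingerprint is already in the set.
        return fingerprint(data) in self._known_hashes


content_filter = FlaggedContentFilter()
content_filter.flag(b"bytes of a video a reviewer already flagged")

# An identical re-upload is recognized without anyone reviewing it again.
print(content_filter.is_known(b"bytes of a video a reviewer already flagged"))
# Different content is left alone.
print(content_filter.is_known(b"bytes of an unrelated video"))
```

Changing even a single byte of the file changes a SHA-256 digest completely, which is why a production system would need a fingerprint that tolerates small edits rather than the exact-match digest assumed here.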

If their use of it becomes known, oppressive political regimes could pressure companies to censor messages from political opponents, or extremists could change their patterns to keep their posts from being deleted by the algorithm. There is also a belief that the system could be used to limit extremist rhetoric from domestic terrorist groups. With the recent pushback against violent rhetoric online, it’s in each company’s best interest to implement the CEP’s system or similar programs.

Twitter has been the most vocal against such measures, commonly citing its desire not to hamper free speech. With the recent lawsuits against the platform, it seems likely the company will eventually move toward limiting the ways hateful rhetoric spreads on its service.