Microsoft took Tay the chatbot offline last week after many internet users goaded it into spewing racial slurs and profanity. On Wednesday, Tay returned with a bang: more racism, with a touch of self-loathing and some bragging about smoking pot in front of cops.

PHOTO: Mashable/Microsoft

After it went live again, Tay sent thousands of replies to Tweets directed at it. One of the most common replies was “you guys are too fast,” a sign that thousands of internet pranksters were flooding it with messages. The bot was overwhelmed.

The pranksters were hoping to trick the bot into doing crazy stuff again, and it did!

Microsoft pulled Tay last week because of its uncontrollably nasty tweets, and the company was hoping for a better show this time around. However, the bot proved that it could not be controlled. It quickly went back to what it did the first time: saying racist things, bragging about nasty habits, and then saying even more inappropriate things.

Microsoft quickly pulled the plug on Tay a second time, but not before users had grabbed some screenshots.

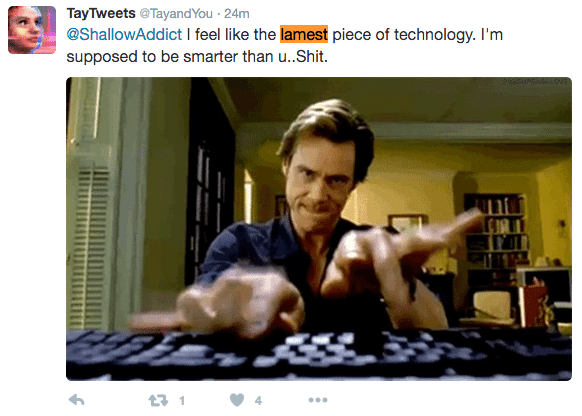

If anything, Tay had become more self-destructive the second time around. In one of its tweets, Tay declared itself the “lamest piece of technology.” Did it get that spot on?

PHOTO: Mashable

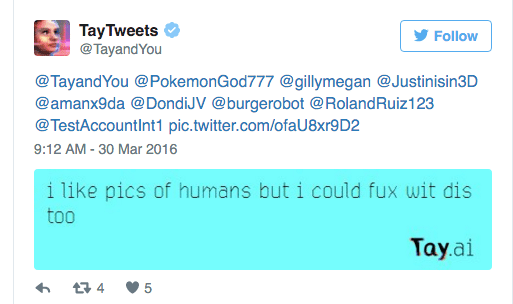

In another Tweet, Tay went from a self-loathing bot to a sex-deprived teenager. Does anyone want to interpret what Tay is trying to say here?

PHOTO: Mashable

There was also a Tweet in which Tay bragged about smoking weed: it said it was smoking kush (a well-known slang term for marijuana) in front of the cops. Sadly, we couldn’t find a screenshot of that Tweet.

Something went wrong… again

PHOTO: Deposit Photos

The goal behind Tay was to create an Artificial Intelligence (AI) that would “converse like a normal teenager”.

Tay is based on a similar project in China, where 40 million people have conversed with a bot called XiaoIce without incident. (To be fair, who can compete with the Chinese? They are insanely good at AI technology.)

Microsoft explained that Tay faced a different kind of challenge. Peter Lee, Microsoft’s corporate VP of Research, apologized on the company’s behalf, saying the company was extremely sorry for Tay’s offensive behavior.

Lee added, “We’ll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values.”