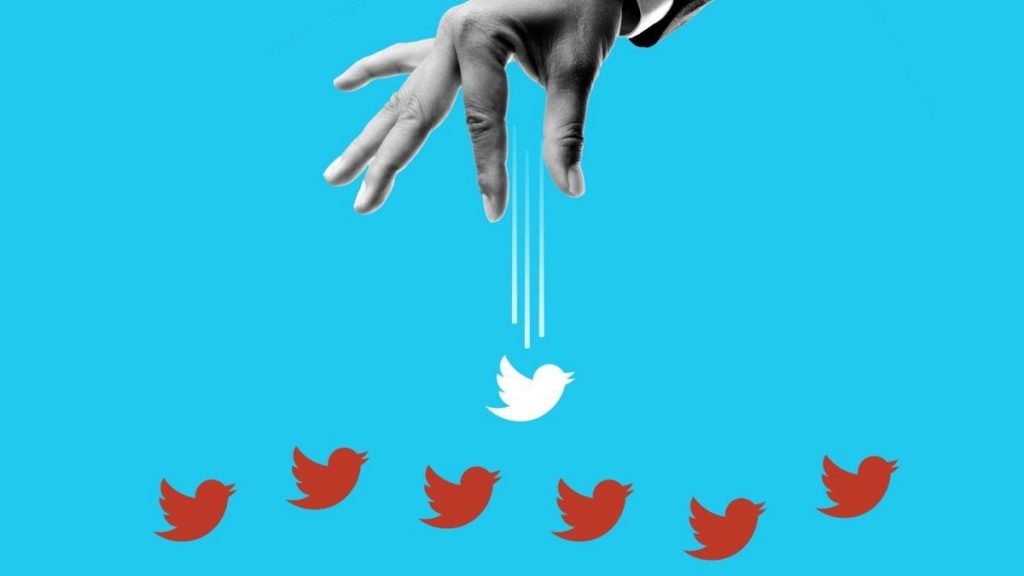

Why doesn’t Twitter remove more white supremacists from the platform? It’s one of the most enduring questions about the company, in large part because Twitter’s own attitudes toward the issue are contradictory.

On one hand, the company has a hateful conduct policy that explicitly bans tweets meant to incite fear of, or violence toward, protected groups. On the other, any number of prominent white nationalists can still be found on the platform, using Twitter’s viral sharing mechanics to grow their audiences and sow division.

Creating Fairness in Hatred

In Motherboard today, Joseph Cox and Jason Koebler talk with an employee who says Twitter has declined to implement an algorithmic solution to white nationalism because doing so would disproportionately affect Republicans. They write:

The employee argued that, on a technical level, content from Republican politicians could get swept up by algorithms aggressively removing white supremacist material. Banning politicians wouldn’t be accepted by society as a trade-off for flagging all of the white supremacist propaganda, he argued.

There is no indication that this position is an official policy of Twitter, and the company told Motherboard that this “is not [an] accurate characterization of our policies or enforcement—on any level.” But the Twitter employee’s comments highlight the sometimes-overlooked debate within the moderation of tech platforms: are moderation issues purely technical and algorithmic, or do societal norms play a greater role than some may acknowledge?

Twitter denied the substance of Motherboard’s report. What strikes me about the article is how it seems to take for granted the idea that an algorithm could effectively identify all white supremacist content and presents Twitter’s failure to implement such a solution as a mystery. “Twitter has not publicly explained why it has been able to so successfully eradicate ISIS while it continues to struggle with white nationalism,” the authors write. “As a company, Twitter won’t say that it can’t treat white supremacy in the same way as it treated ISIS.”

The biggest reason Twitter can’t treat white supremacy the same way it treats ISIS is laid out neatly in a piece by Kate Klonick in the New Yorker today, which explores Facebook’s response to the Christchurch shooting in its immediate aftermath. She writes:

To remove videos or photos, platforms use “hash” technology, which was originally developed to combat the spread of child pornography online. Hashing works like fingerprinting for online content: whenever authorities discover, say, a video depicting sex with a minor, they take a unique set of pixels from it and use that to create a numerical identification tag, or hash. The hash is then placed in a database, and, when a user uploads a new video, a matching system automatically (and almost instantly) screens it against the database and blocks it if it’s a match. Besides child pornography, hash technology is also used to prevent the unauthorized use of copyrighted material, and over the last two and a half years it has been increasingly used to respond to the viral spread of extremist content, such as ISIS-recruitment videos or white-nationalist propaganda, though advocates concerned with the threat of censorship complain that tech companies have been opaque about how posts get added to the database.
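For readers curious what the matching step in that description amounts to, here is a minimal Python sketch. It is a simplification built on assumptions: the names `fingerprint`, `add_to_database`, and `screen_upload` are hypothetical, and it uses an exact SHA-256 digest of a file’s bytes, whereas production systems such as Microsoft’s PhotoDNA use perceptual hashes designed to survive small edits.

```python
import hashlib

# Hypothetical in-memory "hash database" of content a reviewer has already flagged.
# SHA-256 is used only to keep the sketch self-contained; it is an exact hash,
# not the perceptual hashing real moderation systems rely on.
known_hashes: set[str] = set()


def fingerprint(content: bytes) -> str:
    """Return a hex digest that serves as the content's fingerprint."""
    return hashlib.sha256(content).hexdigest()


def add_to_database(content: bytes) -> None:
    """Record the fingerprint of content identified as prohibited."""
    known_hashes.add(fingerprint(content))


def screen_upload(content: bytes) -> bool:
    """Return True if an upload should be blocked (its hash is already known)."""
    return fingerprint(content) in known_hashes


banned = b"placeholder bytes standing in for an identified video"
add_to_database(banned)
print(screen_upload(banned))            # True: an identical copy is blocked
print(screen_upload(banned + b"\x00"))  # False: one extra byte produces a new hash
```

The last line hints at the weakness the Christchurch response exposed: an exact hash only catches byte-for-byte copies, so any re-encode or trim slips through.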

Fortunately, the amount of terrorist content in production is limited, and platforms have been able to stay on top of it by hashing the photos and videos and sharing the hashes with their peers. There is no equivalent hash for white nationalism, particularly of the text-based variety that so often appears on Twitter: a hash identifies a specific, already-known file, and a newly written tweet expressing the same idea in different words matches nothing in the database. A major problem on Twitter is that people are often joking, as anyone who has been suspended for tweeting “I am going to kill you” at a close friend can tell you. That’s one reason the alt-right has embraced irony as a core strategy: it lets them maintain plausible deniability for as long as they can while thwarting efforts to ban them.

How Can Twitter Address the Problem?

The idea that Twitter could create a hash of all white supremacist content and remove it with a click is appealing, but it’s likely to remain a fantasy for the foreseeable future. One of the lessons of the Christchurch shooting is how hard it is for platforms to remove a piece of content, even when it has already been identified and hashed. Facebook began to make headway against the thousands of people uploading minor variants of the shooting video only when it started matching audio snippets against the video, and even then, parts of the footage could still be found on Facebook and Instagram a month later, as Motherboard reported.
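The cat-and-mouse over minor variants is easier to see with a toy perceptual hash. The sketch below is hypothetical and is not how Facebook’s system works: it applies the textbook “average hash” to a made-up 8x8 grayscale frame, and the helper names and the 5-bit threshold are illustrative assumptions. A one-pixel tweak changes the SHA-256 digest completely, while the average hash stays within a small Hamming distance, so the variant is still caught.

```python
import hashlib


def sha(pixels: list[list[int]]) -> str:
    """Exact hash of the raw pixel bytes; any change yields a different digest."""
    return hashlib.sha256(bytes(p for row in pixels for p in row)).hexdigest()


def average_hash(pixels: list[list[int]]) -> int:
    """Average hash: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def is_near_duplicate(a: list[list[int]], b: list[list[int]], threshold: int = 5) -> bool:
    """Treat two frames as the same content if their average hashes nearly agree."""
    return hamming(average_hash(a), average_hash(b)) <= threshold


# Hypothetical 8x8 grayscale frame (values 0-255) standing in for a video frame.
original = [[(x * 32 + y * 8) % 256 for x in range(8)] for y in range(8)]
variant = [row[:] for row in original]
variant[0][0] = min(255, variant[0][0] + 3)  # a tiny edit no viewer would notice

print(sha(original) == sha(variant))        # False: the exact hash no longer matches
print(is_near_duplicate(original, variant))  # True: the perceptual hash still does
```

The threshold is an arbitrary choice for illustration. Uploaders who crop, mirror, re-encode, or film a screen can push a variant past any fixed threshold.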

Of course, there are obvious things Twitter could do to live up to its hateful conduct policy. It could follow Facebook’s lead and ban prominent users like David Duke, the former Ku Klux Klan leader, and Faith Goldy, whom the New York Times describes as “a proponent of the white genocide conspiracy theory, which contends that liberal elites are plotting to replace white Christians with Jews and nonwhites.” (Goldy has a YouTube account as well.)

It could also limit the reach of people who violate its hateful conduct policy. It could hide their accounts from search results and follow suggestions, remove their tweets from trending pages, or even make their tweets visible only to followers.

And it could remove tweets that incite violence against elected officials (yes, even when those tweets come from the sitting president). The bar ought to remain high; I’m sympathetic to the idea that some borderline posts should be preserved for their newsworthiness. But Rep. Ilhan Omar deserves more than a consolation phone call from Jack Dorsey about his decision to leave the president’s tweet up. There should be no shame in removing tweets on a case-by-case basis when they inspire very real death threats against an elected official. Otherwise, what’s a hateful conduct policy even for?

What Twitter should not do, though, is treat white nationalism the same as terrorism. The latter can be removed reasonably well with algorithms, although even then, as Julian Sanchez noted, those algorithms likely trip up innocent bystanders who lack the leverage to pressure Twitter into correcting the mistake. The former is a world-scale problem, made ambiguous from tweet to tweet by the infinite complexity of language. And we’re not going to solve it with math.